Overview

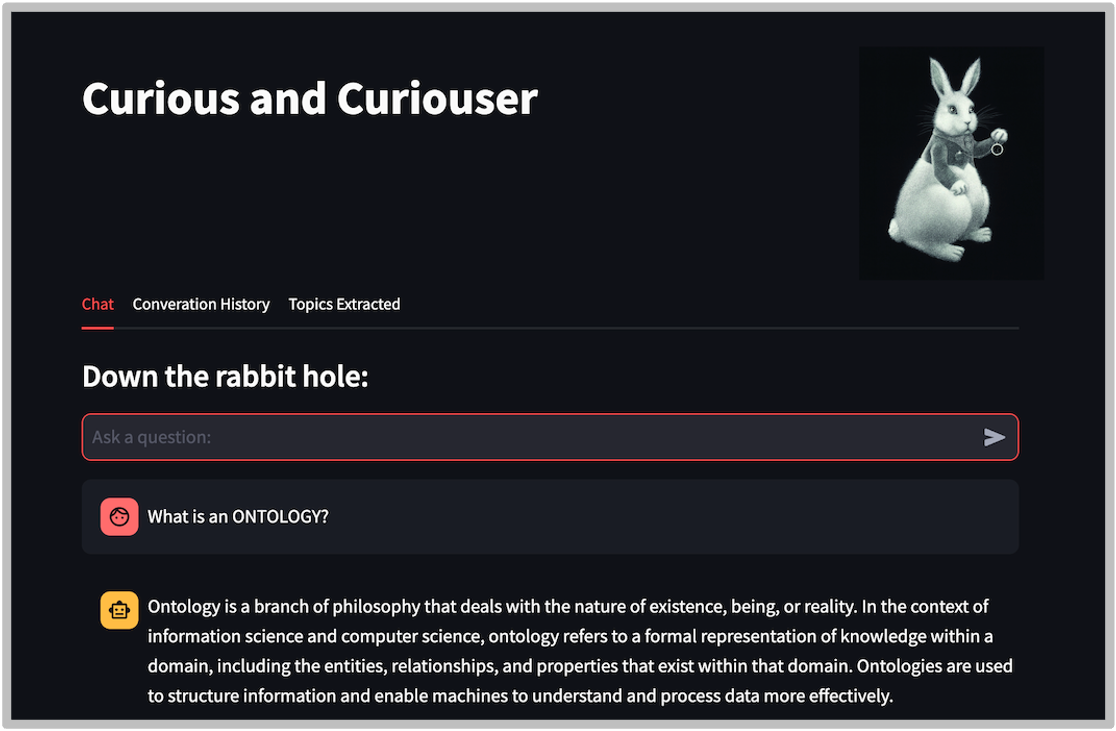

CodeConvo grew out of my hands-on exploration of large language models (LLMs) and modern web application development. It is a Streamlit-based chat interface for conversing with several LLMs, with built-in tools for managing and analyzing those conversations.

Audience

- Developers interested in building LLM-powered applications

- Data Scientists exploring conversational AI implementations

- Technical professionals seeking a customizable chat interface

- AI enthusiasts wanting to experiment with different LLM configurations

Description

CodeConvo offers a feature-rich, intuitive interface for working with language models, with an emphasis on managing and analyzing conversations. What started as a personal tool for AI experimentation has grown into a full-featured application with session state management and conversation export.

Project Highlights

- Conversation Management
  - Real-time streaming responses with async processing
  - Auto-save functionality for session persistence
  - Flexible conversation history management
  - Topic extraction and analysis capabilities
- Technical Innovation
  - Integration of multiple LLM providers
  - Asynchronous response handling
  - Stateful session management
  - Dynamic configuration system
- User Experience
  - Intuitive interface with real-time feedback
  - Customizable display options
  - Rich text formatting support
  - Comprehensive export functionality
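
The highlights do not describe how topic extraction works. As a minimal sketch, assuming messages are stored as dicts with a `content` field (the role/content shape common to chat APIs), a simple keyword-frequency approach could look like this. `extract_topics` and `STOP_WORDS` are hypothetical names, and keyword counting is a stand-in technique, not necessarily what CodeConvo uses.

```python
from __future__ import annotations

import re
from collections import Counter

# Small illustrative stop-word list (hypothetical); a real system would use a fuller one
STOP_WORDS = frozenset({
    "a", "an", "and", "are", "as", "at", "be", "but", "by", "can", "do",
    "for", "from", "how", "i", "in", "is", "it", "me", "my", "of", "on",
    "or", "that", "the", "this", "to", "use", "what", "with", "you", "your",
})


def extract_topics(messages: list[dict], top_n: int = 5) -> list[tuple[str, int]]:
    """Return the top_n most frequent non-stop-word terms across all messages.

    Assumes each message is a dict with a "content" string.
    """
    counts = Counter()
    for message in messages:
        # Lowercase, then keep tokens of a letter followed by 2+ letters/digits
        words = re.findall(r"[a-z][a-z0-9]{2,}", message["content"].lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    # most_common breaks ties by first occurrence
    return counts.most_common(top_n)


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "How do I stream responses in Streamlit?"},
        {"role": "assistant", "content": "Streamlit can stream responses; stream each chunk as it arrives."},
    ]
    print(extract_topics(history, top_n=3))
```

Frequency counting is cheap enough to rerun after every turn; a heavier approach (embeddings or an LLM summarization call) could replace `extract_topics` without changing its inputs or outputs.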

Core Skills

Tools & Technologies

- Frontend Layer
  - Streamlit framework
  - Custom HTML/CSS templating
- Application Layer
  - Python 3.8+
  - Async event processing
  - Custom state management system
- Integration Layer
  - LlamaIndex connector
  - OpenAI API (GPT-3.5, GPT-4)
  - Claude API integration (planned)
  - Custom API wrappers
- Infrastructure Layer
  - AWS EC2 instance
  - Custom domain configuration
  - SSL/TLS security
  - Environment management
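
One way to combine custom API wrappers with async event processing is a small provider-agnostic interface whose `stream` method yields text chunks. The sketch below rests on assumptions: `ChatProvider`, `EchoProvider`, `PROVIDERS`, and `collect_response` are hypothetical names, and `EchoProvider` fakes a streaming endpoint so the example runs without API keys. In a Streamlit app, the `on_chunk` callback might update a placeholder created with `st.empty()`.

```python
from __future__ import annotations

import asyncio
from typing import AsyncIterator, Callable, Dict, Optional, Protocol


class ChatProvider(Protocol):
    """Hypothetical interface that every provider wrapper implements."""

    def stream(self, prompt: str) -> AsyncIterator[str]:
        """Return an async iterator of response text chunks."""
        ...


class EchoProvider:
    """Fake provider that streams the prompt back word by word.

    A real wrapper would call the provider's streaming endpoint here.
    """

    def __init__(self, delay: float = 0.0) -> None:
        self.delay = delay

    async def stream(self, prompt: str) -> AsyncIterator[str]:
        for word in prompt.split():
            await asyncio.sleep(self.delay)  # simulate latency between chunks
            yield word + " "


# Registry so configuration can select a provider by name
PROVIDERS: Dict[str, ChatProvider] = {"echo": EchoProvider()}


async def collect_response(
    provider_name: str,
    prompt: str,
    on_chunk: Optional[Callable[[str], None]] = None,
) -> str:
    """Consume a provider's stream, reporting the partial text after each chunk."""
    provider = PROVIDERS[provider_name]
    parts: list[str] = []
    async for chunk in provider.stream(prompt):
        parts.append(chunk)
        if on_chunk is not None:
            on_chunk("".join(parts))  # e.g. refresh a UI placeholder
    return "".join(parts).strip()


if __name__ == "__main__":
    print(asyncio.run(collect_response("echo", "hello streaming world", print)))
```

Keeping every provider behind the same `stream` method means the planned Claude integration would, under this design, need only a new wrapper class and a registry entry.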

Technical Skillset

- Architecture Design
  - Event-driven programming patterns
  - Stateful application design
  - Modular component architecture
- User Experience Engineering
  - Real-time response streaming
  - Dynamic state updates
  - Interactive component design
- Data Engineering
  - Conversation state persistence
  - Topic extraction algorithms
  - Message thread management
- System Integration
  - API endpoint design
  - Error handling strategies
  - Rate limiting implementation
- DevOps Practices
  - Deployment automation
  - Environment configuration
  - Performance optimization
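
The skillset lists rate limiting without further detail. A token bucket is one common choice; the sketch below assumes limiting is applied to outgoing LLM calls per session, and `TokenBucket` with its `rate`, `capacity`, and `clock` parameters is a hypothetical illustration rather than the project's code.

```python
from __future__ import annotations

import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` calls while enforcing `rate` calls per second.

    `clock` is injectable so the limiter can be tested without real waiting.
    """

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Add tokens for the elapsed time, never exceeding capacity
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)

    def try_acquire(self) -> bool:
        """Consume one token if available; return False if the caller must wait."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    limiter = TokenBucket(rate=0.5, capacity=3)  # bursts of 3, then ~1 call per 2 s
    for i in range(5):
        print(i, "allowed" if limiter.try_acquire() else "rate limited")
```

The bucket lets a user send a short burst of messages while capping the sustained request rate; when `try_acquire` returns False, the interface could show a brief "please wait" notice instead of calling the API.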

Project Insights

Implementation Challenges

- Handling streaming responses efficiently

- Managing conversation state across sessions

- Implementing reliable auto-save functionality

- Designing an intuitive topic extraction system
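
For the auto-save and cross-session state challenges, one standard safeguard is to write each save to a temporary file and then atomically swap it into place, so a crash mid-write cannot corrupt the previous save. The sketch assumes conversations are persisted as JSON files on local disk; `save_conversation`, `load_conversation`, and the `codeconvo_demo` directory are hypothetical names, not the project's actual API.

```python
from __future__ import annotations

import json
import os
import tempfile
from pathlib import Path


def save_conversation(path: Path, messages: list[dict]) -> None:
    """Write messages to `path` atomically via a temp file and os.replace."""
    path.parent.mkdir(parents=True, exist_ok=True)
    # Temp file in the same directory, so the rename stays on one file system
    fd, tmp_name = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as tmp:
            json.dump({"messages": messages}, tmp, ensure_ascii=False, indent=2)
            tmp.flush()
            os.fsync(tmp.fileno())  # push bytes to disk before the swap
        os.replace(tmp_name, path)  # atomic rename on POSIX file systems
    except BaseException:
        # Remove the temp file if anything failed before the replace
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
        raise


def load_conversation(path: Path) -> list[dict]:
    """Restore a saved conversation, or start fresh if none exists yet."""
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as f:
        return json.load(f)["messages"]


if __name__ == "__main__":
    target = Path(tempfile.gettempdir()) / "codeconvo_demo" / "session.json"
    save_conversation(target, [{"role": "user", "content": "Hello"}])
    print(load_conversation(target))
```

In a Streamlit app, calling `save_conversation` after each completed response and `load_conversation` when a session starts would be one way to wire this into session state.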

Future Development

- PDF export capabilities

- Enhanced visualization options

- Additional LLM provider integrations

- Advanced analytics dashboard

Learn More:

GitHub Repository | Explore App