Google Scholar (430+ citations)

Big Picture

Eye movements are such a natural and universal behavior that most people never think about how they happen or even THAT they happen. Whether you want to watch something, drive somewhere, or do nothing at all, eye movements are happening constantly. In fact, people shift their gaze several times per second, every waking hour of every day.

Our visual attention is intricately linked to (but not the same as) where our eyes are directed, as when you are driving a car and watching carefully for pedestrians, bikes, and pesky scooters.

Sometimes visual attention is “grabbed” by color, SHAPE, or any number of visual properties (good designers are experts at directing visual attention through a space, artwork, or webpage).

Other times, eye gaze may be deployed in a highly targeted fashion, such as when looking for your friend in a crowd of parkgoers: you may not bother to look at the red balloons or other obviously non-friend items, but you may scrutinize the face of every silhouette that matches your friend’s familiar profile.

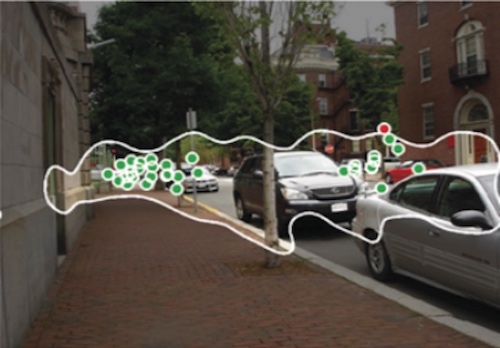

Where the eyes are looking and how long they linger in a particular spot are naturally occurring behaviors that can indicate which scene regions are visually or cognitively salient.

Research Questions

My work seeks to identify how an individual’s specific visual memory impacts their attention when performing a familiar task. Toward this goal, much of my work has involved recording people’s eye movements and comparing human gaze locations with the predictions of different models. Specific research questions:

- How do people combine bottom-up visual information and top-down scene knowledge to selectively deploy the eyes during a visual search task?

- How does context-specific learning (e.g. the familiar layout of one’s bedroom) influence eye movements and attention?

- Can computational models learn an individual’s specific eye movement patterns and predict which regions of a scene or visual display will attract that person’s attention?
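As a rough illustration of what comparing gaze locations with a model prediction can look like, here is a minimal sketch. It is not the analysis code from the studies listed on this page. The function name, the toy 5×5 prediction map, the fixation list, and the 20% cutoff are all hypothetical choices made for the example. The idea is simply to ask what share of a person's fixations land inside the region a model scores most highly.

```python
def fraction_of_fixations_in_top_region(pred_map, fixations, top_fraction=0.2):
    """Return the share of fixations that land in the highest-scoring cells.

    pred_map: 2D list of model scores (higher = more likely to be looked at).
    fixations: list of (row, col) gaze locations on the same grid.
    top_fraction: portion of the map treated as the model's predicted region.
    """
    if not fixations:
        raise ValueError("need at least one fixation")
    # Sort all scores from highest to lowest to find the cutoff score.
    scores = sorted((v for row in pred_map for v in row), reverse=True)
    n_top = max(1, round(len(scores) * top_fraction))
    threshold = scores[n_top - 1]
    # Count fixations on cells at or above the cutoff (ties count as hits).
    hits = sum(1 for r, c in fixations if pred_map[r][c] >= threshold)
    return hits / len(fixations)


# Hypothetical toy data: scores rise row by row, so the bottom row is the top 20%.
toy_map = [[5 * r + c for c in range(5)] for r in range(5)]
toy_fixations = [(4, 0), (4, 3), (2, 2), (4, 4)]
score = fraction_of_fixations_in_top_region(toy_map, toy_fixations)
print(score)  # 0.75: three of the four fixations fall in the bottom row
```

A model that captures where people look should score well above the cutoff fraction itself (here 0.2), which is roughly what fixations scattered at random over the map would produce.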

Publications

Person, place, and past influence eye movements during visual search. Hidalgo-Sotelo, B., & Oliva, A. (2010). In S. Ohlsson & R. Catrambone (Eds.), Proceedings of the 32nd Annual Conference of the Cognitive Science Society (pp. 820-825). Austin, TX: Cognitive Science Society.

Modeling Search for People in 900 Scenes: A combined source model of eye guidance. Hidalgo-Sotelo, B., Ehinger, K.*, Torralba, A., & Oliva, A. (2009). Visual Cognition, 17(6), 945-978.

Why do we miss rare targets? Exploring the boundaries of the low prevalence effect. Rich, A., Kunar, M., Van Wert, M., Hidalgo-Sotelo, B., Horowitz, T., & Wolfe, J. (2008). Journal of Vision, 8(15):15, 1-17.

Human Learning of Contextual Priors for Object Search: Where does the time go? Hidalgo-Sotelo, B., Oliva, A., & Torralba, A. (2005). Proceedings of the 3rd Workshop on Attention and Performance at CVPR. Washington, DC: IEEE Computer Society.

Posters

Person, place, and past influence eye movements during visual search. Hidalgo-Sotelo, B., & Oliva, A. (August 2010). Cognitive Science Society Annual Meeting, Portland OR.

History repeats itself: A role for observer-dependent scene context in search. Hidalgo-Sotelo, B., & Oliva, A. (May 2010). Vision Sciences Society Annual Meeting, Naples FL.

Delaying initial saccade latency in familiar scenes improves search guidance. Hidalgo-Sotelo, B., & Oliva, A. (May 2008). Tufts Conference on Cognitive Neuroscience of Visual Knowledge: Where vision meets memory. Boston MA.

Look before you leap: Lengthening initial saccade latency in familiar scenes improves search guidance. Hidalgo-Sotelo, B., & Oliva, A. (May 2008). Vision Sciences Society Annual Meeting, Naples FL.

Do rare features pop out? Exploring the boundaries of the low prevalence effect. Rich, A., Kunar, M., Van Wert, M., Hidalgo-Sotelo, B., & Wolfe, J. (May 2007). Vision Sciences Society Annual Meeting, Sarasota FL.

Decomposing the effect of contextual priors in search: Where does the time go? Hidalgo-Sotelo, B., & Oliva, A. (May 2006). Vision Sciences Society Annual Meeting, Sarasota FL.

What happens during search for rare targets? Eye movements in low prevalence visual search. Rich, A. N., Hidalgo-Sotelo, B., Kunar, M. A., Van Wert, M. J., & Wolfe, J. M. (May 2006). Vision Sciences Society Annual Meeting, Sarasota FL.

Rapid Goal-Directed Exploration of a Scene: The Interaction of Contextual Guidance and Salience. Kenner, N., Hidalgo-Sotelo, B., & Oliva, A. (May 2005). Vision Sciences Society Annual Meeting, Sarasota FL.